As a bit of background, I recently wrote about the Cluster API vSphere provider (CAPV) and how to use it to deploy a Kubernetes cluster to a vSphere environment. Read that post first, otherwise this one won’t make much sense. I also experimented with using a ClusterResourceSet to automatically install the Calico CNI in the workload cluster, but that isn’t related to the issue at hand.

At the time of writing, the CAPV team is working on this issue, and it should be resolved in a future release that leverages the vCenter certificate thumbprint.

Anyway, in the CAPV post I showed how to deploy a Kubernetes cluster to vSphere, which works quite well. What I didn’t show is that one of the containers in the vSphere CSI controller pod never reached the ready state because it didn’t trust the vCenter certificate, even though the certificate thumbprint is in the cluster definition manifest (it comes from the value you originally set in clusterctl.yaml).
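Before touching anything, it can help to confirm that the thumbprint you put in clusterctl.yaml actually matches the certificate vCenter presents. The sketch below assumes, as the CAPV getting-started docs describe, that the thumbprint is the colon-separated SHA-1 fingerprint of the vCenter certificate (set through a variable such as `VSPHERE_TLS_THUMBPRINT`), that `openssl` is installed, and that vCenter listens on port 443. The function names are hypothetical. Note this only verifies the value; it does not fix the trust error.

```shell
#!/usr/bin/env bash
# Print the SHA-1 fingerprint of a PEM certificate file in the
# colon-separated form (AA:BB:...) used for the thumbprint.
# Hypothetical helper name.
cert_thumbprint() {
  openssl x509 -in "$1" -noout -sha1 -fingerprint | cut -d= -f2
}

# Fetch the certificate presented by a server (assumed to listen on 443)
# and print its SHA-1 thumbprint. Needs network access to the server.
server_thumbprint() {
  openssl s_client -connect "$1:443" </dev/null 2>/dev/null \
    | openssl x509 -noout -sha1 -fingerprint \
    | cut -d= -f2
}

# Usage:
#   server_thumbprint 192.168.1.102
# then compare the result with the thumbprint in clusterctl.yaml.
```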

A colleague who is highly knowledgeable in Kubernetes helped me fix this issue. It was a great learning experience, so I thought I’d go through the steps here.

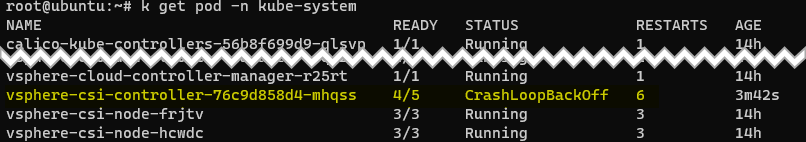

First, here is the pod that fails to initialize. As you can see, 1 of its 5 containers is not ready.

kubectl get pod -n kube-system

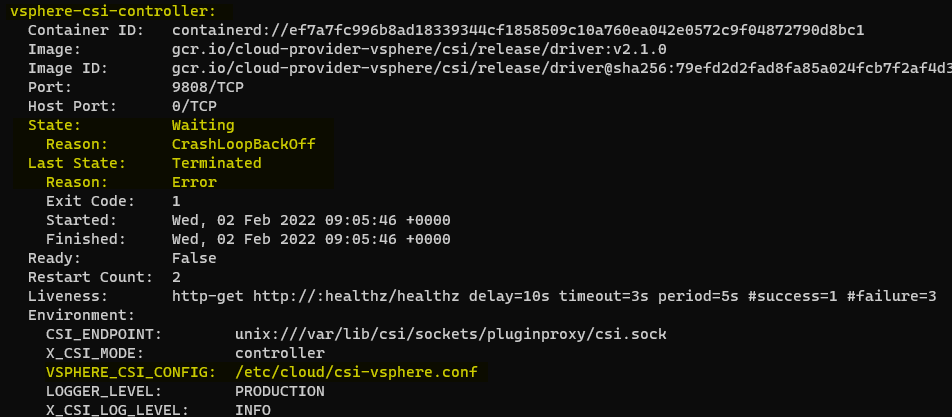
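In a busy namespace, spotting the one pod stuck at 4/5 by eye gets tedious. Here is a small sketch that filters the table for you, assuming the default `kubectl get pod` layout (NAME, READY, STATUS, RESTARTS, AGE) where READY is shown as ready/total. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Read `kubectl get pod` table output on stdin and print NAME and READY
# for every pod whose ready count is lower than its container count.
# Assumes the default column layout (no -A, so NAME is column 1).
not_ready_pods() {
  awk 'NR > 1 {
         split($2, r, "/")
         if ((r[1] + 0) < (r[2] + 0)) print $1, $2
       }'
}

# Usage against a live cluster:
#   kubectl get pod -n kube-system | not_ready_pods
```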

Running describe gives more information and shows that the problem is the container named “vsphere-csi-controller”.

kubectl describe pod vsphere-csi-controller-76c9d858d4-mhqss -n kube-system

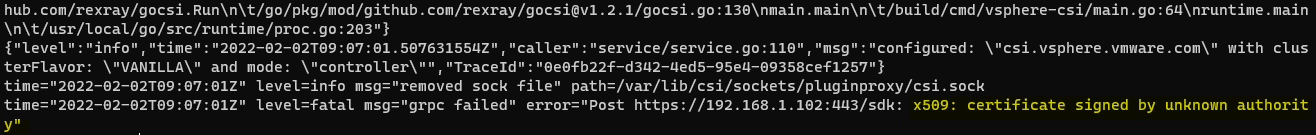

Displaying the logs of that container (you must specify the container with -c when a pod has more than one) shows that the vCenter (192.168.1.102) certificate is not trusted, even though its thumbprint is in the cluster YAML manifest.

kubectl logs vsphere-csi-controller-76c9d858d4-mhqss -n kube-system -c vsphere-csi-controller

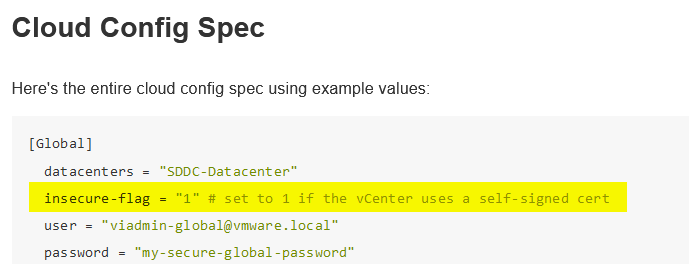
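If you’d rather not scroll through the whole log, you can filter for the TLS verification error directly. This sketch assumes the failure is reported with Go’s standard message `x509: certificate signed by unknown authority`, which matches the “unknown authorities” wording in the cloud provider docs; the exact log line in your environment may differ, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print matching lines and exit 0 if the log text on stdin contains Go's
# standard untrusted-certificate error; exit 1 if it does not.
has_untrusted_cert_error() {
  grep -i 'x509: certificate signed by unknown authority'
}

# Usage against the failing container:
#   kubectl logs vsphere-csi-controller-76c9d858d4-mhqss -n kube-system \
#     -c vsphere-csi-controller | has_untrusted_cert_error
```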

After a bit of digging, we found a setting in the vSphere cloud provider’s configuration reference that bypasses the error for certificates signed by unknown authorities.

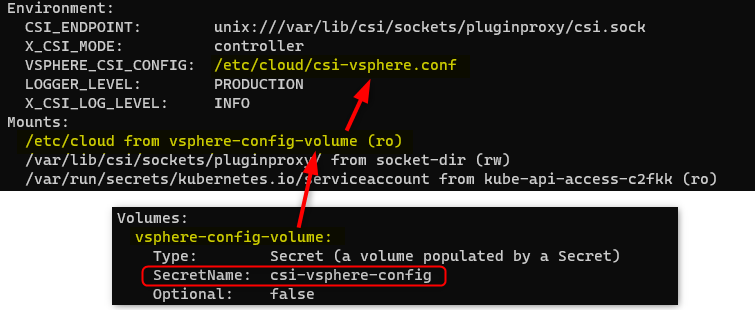

Going back to the describe output, we find that the CSI controller reads its configuration from /etc/cloud/csi-vsphere.conf, which is mounted from a volume named vsphere-config-volume backed by the csi-vsphere-config secret. So the fix must go in this secret.

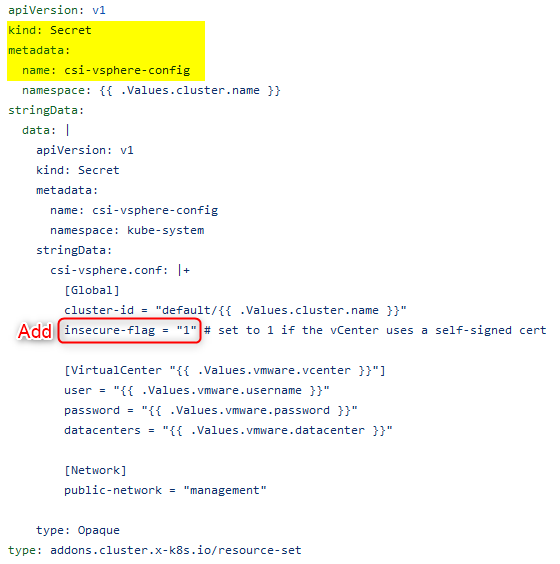

Looking in the cluster definition manifest, we find this secret, which looks an awful lot like the cloud provider config format. So we can add insecure-flag = “1” under either the [Global] or the [VirtualCenter] section.

insecure-flag = "1"

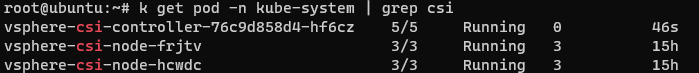
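To make the placement concrete, here is a sketch of what the csi-vsphere.conf content might look like with the flag under [Global], written as a heredoc so you can save it and inspect it locally. Apart from the vCenter address and insecure-flag, every value is a hypothetical placeholder rather than a copy of the CAPV template, and the function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Write an illustrative csi-vsphere.conf to the path given as $1.
# All values except the vCenter address are hypothetical placeholders.
write_sample_csi_conf() {
  cat > "$1" <<'EOF'
[Global]
cluster-id = "default/my-cluster"
insecure-flag = "1"

[VirtualCenter "192.168.1.102"]
user = "administrator@vsphere.local"
password = "changeme"
datacenters = "MyDatacenter"
EOF
}

# Print the section header that owns the insecure-flag key, as a quick
# check that the flag landed where you intended.
section_of_insecure_flag() {
  awk '/^\[/ { section = $0 }
       /^insecure-flag[[:space:]]*=/ { print section }' "$1"
}
```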

Then you just have to redeploy the cluster and the pod should initialize correctly.

Alternatively, to fix a running cluster, edit the csi-vsphere-config secret in the kube-system namespace (you’ll have to base64-decode the value, edit it, and re-encode it) and then delete the vsphere-csi-controller pod so that it gets recreated with the updated secret.

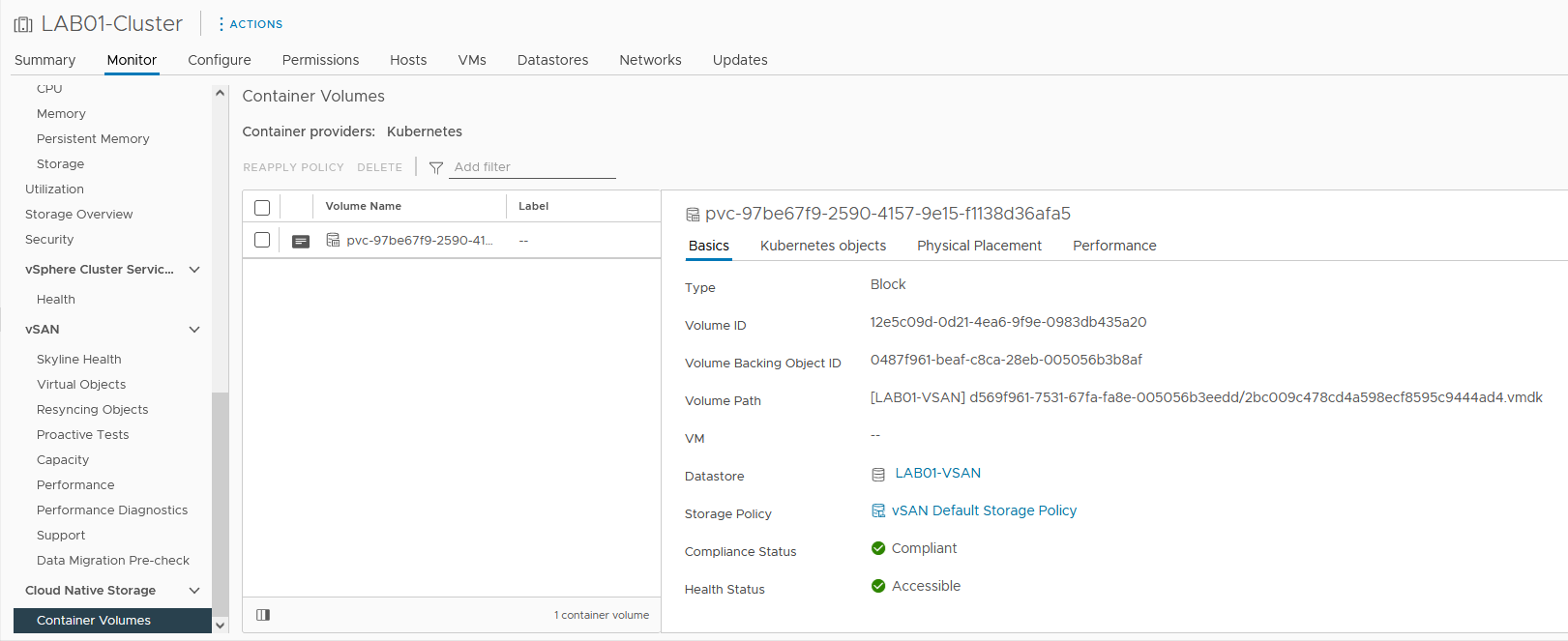
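The decode, edit, re-encode dance can be scripted. This is a minimal sketch, assuming the secret stores the file under the key `csi-vsphere.conf` (matching the mounted file name) and that the config has a `[Global]` header on its own line; the function names are hypothetical. Only the kubectl calls touch the cluster, and they are wrapped in a function that isn’t run unless you call it.

```shell
#!/usr/bin/env bash
# Insert insecure-flag = "1" right after the [Global] header of a
# csi-vsphere.conf read on stdin, unless the key is already present.
add_insecure_flag() {
  awk '
    /^insecure-flag[[:space:]]*=/ { present = 1 }
    { lines[NR] = $0 }
    END {
      for (i = 1; i <= NR; i++) {
        print lines[i]
        if (!present && lines[i] == "[Global]") print "insecure-flag = \"1\""
      }
    }'
}

# Decode a base64 config, add the flag, and re-encode it on a single line
# (tr strips the wrapping that some base64 implementations add).
patch_conf_b64() {
  printf '%s' "$1" | base64 -d | add_insecure_flag | base64 | tr -d '\n'
}

# Live-cluster flow. $1 is the CSI controller pod name. The dot in the
# key must be escaped inside the jsonpath expression.
fix_running_cluster() {
  local ns=kube-system secret=csi-vsphere-config current patched
  current=$(kubectl get secret "$secret" -n "$ns" \
    -o jsonpath='{.data.csi-vsphere\.conf}')
  # Refuse to continue rather than overwrite the secret with an empty value.
  [ -n "$current" ] || { echo "key csi-vsphere.conf not found" >&2; return 1; }
  patched=$(patch_conf_b64 "$current")
  kubectl patch secret "$secret" -n "$ns" --type merge \
    -p "{\"data\":{\"csi-vsphere.conf\":\"$patched\"}}"
  # Deleting the pod lets its controller recreate it with the new secret.
  kubectl delete pod "$1" -n "$ns"
}
```

For example, `fix_running_cluster vsphere-csi-controller-76c9d858d4-mhqss` (your pod name will differ). I went with a merge patch so the change is repeatable; `kubectl edit secret csi-vsphere-config -n kube-system` works just as well if you prefer to paste the re-encoded value by hand.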

You can then start using the vSphere CSI driver to provision Cloud Native Storage in your environment, but that will be the topic of another blog.